What Are Neural Network Algorithms and How Do They Work in Artificial Intelligence?

Neural networks are one family of learning algorithms used in machine learning. They consist of layers that analyze data and learn from it.

| Copyright @AIMagnus |

Artificial Neural Network Algorithms

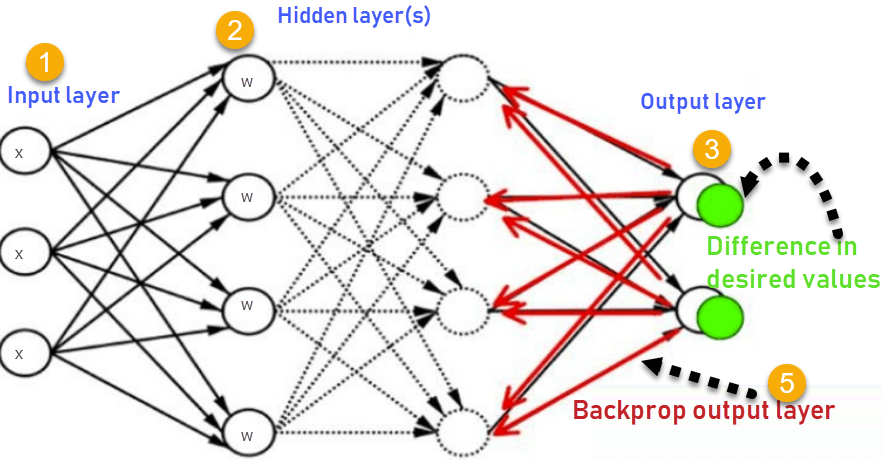

Artificial Neural Network algorithms are inspired by the human brain. The artificial neurons are interconnected and communicate with each other. Each connection is weighted by previous learning events, and with each new input of data more learning takes place. Many different algorithms are associated with Artificial Neural Networks, and one of the most important is deep learning, which is especially concerned with building much larger and more complex neural networks. An example of deep learning can be seen in the picture above.

Dimensionality Analysis Algorithms

Dimensionality is about the number of variables in the data and the dimensions they belong to. This type of analysis aims to reduce the number of dimensions, and the variables associated with them, while retaining as much of the information as possible. In other words, it seeks to remove the less meaningful data while still arriving at essentially the same end result.
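The post does not name a specific technique, so the sketch below assumes principal component analysis (PCA), one common way to reduce dimensions. It projects 2-D points onto their direction of largest variance, so each point is stored as one number instead of two. The function names and the sample points are made up for illustration.

```python
import math

def pca_2d_to_1d(points):
    """Project 2-D points onto their direction of largest variance."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Entries of the 2x2 covariance matrix.
    cxx = sum(x * x for x, _ in centered) / n
    cyy = sum(y * y for _, y in centered) / n
    cxy = sum(x * y for x, y in centered) / n
    # Closed-form angle of the principal axis of a symmetric 2x2 matrix.
    theta = 0.5 * math.atan2(2 * cxy, cxx - cyy)
    axis = (math.cos(theta), math.sin(theta))
    # One coordinate per point instead of two.
    reduced = [x * axis[0] + y * axis[1] for x, y in centered]
    return reduced, axis, (mean_x, mean_y)

def reconstruct(reduced, axis, mean):
    """Map the 1-D coordinates back into the original 2-D space."""
    return [(mean[0] + r * axis[0], mean[1] + r * axis[1]) for r in reduced]

# Illustrative data: the two variables are strongly related (y is about 2x).
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
reduced, axis, mean = pca_2d_to_1d(points)
restored = reconstruct(reduced, axis, mean)
```

Because the two variables move together, the restored points land close to the originals even though half of the stored numbers were dropped.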

Artificial Neural Networks – What Are They

AILabPage defines artificial neural networks (ANNs) as “Biologically inspired computing code with the number of simple, highly interconnected processing elements for simulating (only an attempt) human brain working & to process information model”. An ANN is quite different from a conventional computer program, though. Neural networks consist of input and output layers and at least one hidden layer. There are several kinds of neural networks in deep learning:

· Multi-Layer Perceptron

· Radial Basis Network

· Recurrent Neural Networks

· Generative Adversarial Networks

· Convolutional Neural Networks

A neural network based on radial basis functions can be used for strategic reasons. There are several other neural network models besides those mentioned above. For an introduction to neural networks and their working model, continue reading this post. You will get a sense of how they work and how they are used for real mathematical problems.

ANNs learn, get trained and adjust automatically, much as we humans do. Although ANNs are inspired by the human brain, they in fact run on a far simpler plane. The structure of neurons is now used for machine learning, which is why it is called artificial learning. This development has helped solve various problems, especially where layering is needed for refinement and granular detail.

Neural Network Architecture

Neural networks consist of input layers, hidden layers and output layers. Their main job is to transform input into a valuable output. They are excellent examples of mathematical constructs. Information flows through a neural network in two ways.

· Feedforward Networks – In these, signals travel in one direction only, towards the output layer, without any loops. They are used extensively in pattern recognition. Such a network has a single input layer and a single output layer, but it can have zero or multiple hidden layers. A network of this kind is used in two modes:

o At the time of its learning, or “being trained”

o At the time of operating normally or “after being trained”

· Feedback Networks – These recurrent or interactive networks can use their internal state (memory) to process sequences of inputs. Signals can travel in both directions, with loops in the network. As of now they are mostly limited to time series and sequential tasks. This is the typical human brain model.
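As a minimal sketch of the feedforward case, the code below passes a signal through each layer exactly once, in order, with no loops. The sigmoid activation, the bias terms and all weight values are assumptions chosen for illustration; they do not come from the post.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """One fully connected layer: weights[j][i] links input i to node j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass the signal through each layer once, in order, with no loops."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal

# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.5, 0.2]], [0.05])

with_hidden = feedforward([1.0, 2.0], [hidden, output])
# Zero hidden layers: the inputs connect straight to the output layer.
no_hidden = feedforward([1.0, 2.0], [([[0.3, -0.1]], [0.0])])
```

The same `feedforward` function covers both cases mentioned above, since the number of hidden layers is simply the length of the `layers` list minus one.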

Architectural Components

· Input Layers, Neurons, and Weights – The basic unit in a neural network is called a neuron or node. These units receive input from an external source or from other nodes. Each unit computes an output based on its inputs and their associated weights. A weight is assigned to each input according to its importance relative to the other inputs. Finally, a function is applied to the weighted sum to compute the output.

o Let’s assume our task is to make tea, so our ingredients represent the input neurons, as these are the building blocks or starting points. The amount of each ingredient is called a “weight”. Dumping tea, sugar, spices, milk and water into a pan and mixing them transforms the mixture into another state and colour. This process of transformation can be called an “activation function”.

· Hidden Layers and Output Layers – The hidden layer is always isolated from the external world, hence it is called hidden. The main job of the hidden layer is to take inputs from the input layer, perform its calculations and pass the result on to the output nodes. A bunch of hidden nodes can be called a hidden layer.

o Continuing the same example above – In our tea-making task, the mixture of ingredients coming out of the input layer starts changing colour upon heating (the computation process). The layers made up of the intermediate products are called “hidden layers”. Heating can be compared with the activation process; at the end we get our final tea as output.

The network described here is much simpler, for ease of understanding, than the ones you will find in real life. All computations in the forward propagation step and the backpropagation step are done in the same way (at each node) as discussed before.
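A single neuron can be sketched in a few lines: multiply each input by its weight, add the results, and apply an activation function. The bias term, the two activation functions shown and every number here are assumptions added for illustration.

```python
import math

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs, followed by an activation function."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

inputs = [1.0, 0.5, 2.0]
# Hypothetical weights: a larger magnitude gives that input more influence.
weights = [0.9, 0.1, -0.4]
bias = 0.2

out_sigmoid = neuron(inputs, weights, bias, sigmoid)
out_relu = neuron(inputs, weights, bias, relu)
```

Swapping the activation function changes how the same weighted sum is transformed, much as changing the heating would change the tea made from the same ingredients.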

Neural Network Work Flow – Layers of Learning

A neural network’s learning process is not very different from a human’s: humans learn from experience in life, while neural networks require data to gain experience and learn. Accuracy increases with the amount of data over time. Similarly, humans perform a task better and better by doing it over and over.

The underlying foundation of neural networks is layer upon layer of connections. The entire neural network model is based on a layered architecture. Each layer has its own responsibility. These networks are designed to use layers of “neurons” to process raw data and find patterns and objects in it that are usually hidden to the naked eye. To train a neural network, data scientists put their data in three different baskets.

· Training data set – This helps the network learn the various weights between nodes.

· Validation data set – Used to fine-tune the model, for example its hyperparameters.

· Test data set – Used to evaluate the accuracy and record the margin of error.
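The three baskets can be sketched as a shuffled split. The 70/15/15 proportions, the fixed seed and the function name are assumptions; the post does not prescribe any particular ratio.

```python
import random

def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle the samples and cut them into train, validation and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever is left over
    return train, val, test

# Illustrative data: 100 samples identified by the numbers 0..99.
train, val, test = split_dataset(range(100))
```

Shuffling before cutting keeps each basket representative of the whole data set, and the fixed seed makes the split repeatable.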

Each layer takes input, extracts features and feeds them into the next layer, i.e. each layer works as an input layer to the next one. The first layer’s job is to receive information, and the last layer’s job is to output the required information. Hidden layers, or core layers, process all the information in between.

· Assign a random weight to all the links to start the algorithm.

· Find the activation rate of all hidden nodes by using the inputs and the links.

· Find the activation rate of the output nodes by using the activation rate of the hidden nodes and the links to the output.

· Find the error at the output nodes and recalibrate all the links between hidden and output nodes.

· Using the weights and the error at the output, cascade the error down to the hidden nodes. The weights applied on the connections are a neural network’s best friend.

· Recalibrate the weights between hidden and input nodes, and repeat the process until the convergence criteria are met.

· Finally, the predicted value is read from the output neurons, each of which sums the weighted values it receives. This is the output.

· Patterns of information are fed into the network via the input units, which trigger the layers of hidden units, and these, in turn, arrive at the output units.
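The steps above can be sketched as a small training loop. Everything specific here is an assumption for illustration: the sigmoid activation, the squared-error measure, the learning rate, the fixed number of epochs standing in for a convergence criterion, and the toy task (logical OR, with a constant 1.0 appended to each input to act as a bias).

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_ih, w_ho):
    """Activation of the hidden nodes, then of the single output node."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_ih]
    output = sigmoid(sum(w * h for w, h in zip(w_ho, hidden)))
    return hidden, output

def mean_squared_error(data, w_ih, w_ho):
    return sum((t - forward(x, w_ih, w_ho)[1]) ** 2 for x, t in data) / len(data)

def train(data, n_hidden=3, rate=0.5, epochs=2000, seed=1):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Assign a random weight to every link.
    w_ih = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    w_ho = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    initial_error = mean_squared_error(data, w_ih, w_ho)
    for _ in range(epochs):
        for x, target in data:
            # Activation of the hidden nodes and of the output node.
            hidden, output = forward(x, w_ih, w_ho)
            # Error at the output node, scaled by the sigmoid slope.
            delta_out = (target - output) * output * (1 - output)
            # Cascade the error down to the hidden nodes.
            delta_hidden = [delta_out * w_ho[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            # Recalibrate hidden-to-output links, then input-to-hidden links.
            for j in range(n_hidden):
                w_ho[j] += rate * delta_out * hidden[j]
                for i in range(n_in):
                    w_ih[j][i] += rate * delta_hidden[j] * x[i]
    return w_ih, w_ho, initial_error

# Logical OR; the trailing 1.0 in each input is a constant bias input.
data = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
        ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]

w_ih, w_ho, initial_error = train(data)
final_error = mean_squared_error(data, w_ih, w_ho)
```

Comparing `initial_error` with `final_error` shows whether the repeated recalibration actually moved the network closer to the targets.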

Deep learning’s most common model is “The 3-layer fully connected neural network”. This has become the foundation for most of the others. The backpropagation algorithm is commonly used to improve the prediction accuracy of a neural network. It does so by adjusting the connection weights, most strongly those that contribute most to the error, in an attempt to lower the cost function.

Behind The Scenes – Neural Networks Algorithms

There are many different algorithms used to train neural networks, each with many variants. Let’s visualise an artificial neural network (ANN) to get a fair idea of how neural networks operate. By now we all know that there are three kinds of layers in a neural network.

· The input layer

· The hidden layer

· The output layer

We outline a few main algorithms to build a basic understanding and the big picture of what happens behind the scenes of these excellent networks. In neural networks almost every neuron influences, and is connected to, the others, as seen in the picture above. The five methods below are commonly used in neural networks.

· Feedforward algorithm

· Sigmoid – A common activation function

· Cost function

· Backpropagation

· Gradient descent – Applying the learning rate
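For a single neuron and a single training example, the five methods listed above can be written out as follows. The symbols are assumptions introduced here: x_i are the inputs, w_i the weights, b a bias, y the target, a the neuron’s output and η the learning rate. The squared-error cost is one common choice, not the only one.

```latex
\begin{aligned}
z &= \sum_i w_i x_i + b, \qquad a = \sigma(z) && \text{(feedforward)} \\
\sigma(z) &= \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr) && \text{(sigmoid)} \\
C &= \tfrac{1}{2}\,(y - a)^2 && \text{(cost function)} \\
\frac{\partial C}{\partial w_i} &= (a - y)\,\sigma'(z)\,x_i && \text{(backpropagation)} \\
w_i &\leftarrow w_i - \eta\,\frac{\partial C}{\partial w_i} && \text{(gradient descent)}
\end{aligned}
```

The backpropagation line is just the chain rule applied to the cost, and the learning rate η controls how large a step each update takes.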

Recursive Neural Networks

Recursive Neural Networks – Call it a deep tree-like structure. When the need is to parse a whole sentence, we use a recursive neural network. The tree-like topology allows branching connections and hierarchical structure. A natural question here is how recursive neural networks differ from recurrent neural networks.

· Question – How are recursive neural networks different from recurrent neural networks?

· Answer – Recurrent neural networks are in fact recursive neural networks with a particular structure: that of a linear chain.

Recursive neural networks are a hierarchical kind of network: there is no time aspect to the input sequence, but the input has to be processed hierarchically, in a tree fashion.
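A minimal sketch of this tree-style processing: the same weights are applied at every node of a parse tree to merge two child vectors into one parent vector. The tanh activation, the 2-dimensional word vectors, the weight values and the example sentence are all assumptions for illustration.

```python
import math

def compose(left, right, weights):
    """Merge two child vectors into one parent vector of the same size."""
    children = left + right  # concatenate the two child vectors
    return [math.tanh(sum(w * c for w, c in zip(row, children)))
            for row in weights]

def encode(tree, embeddings, weights):
    """A leaf is a word (str); an inner node is a (left, right) pair."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(encode(left, embeddings, weights),
                   encode(right, embeddings, weights),
                   weights)

# Hypothetical 2-dimensional word vectors and a shared 2x4 weight matrix.
embeddings = {"the": [0.1, 0.3], "cat": [0.7, -0.2], "sleeps": [-0.4, 0.5]}
weights = [[0.5, -0.1, 0.3, 0.2], [-0.2, 0.4, 0.1, 0.6]]

# Parse tree for "the cat sleeps": ((the cat) sleeps).
sentence_vector = encode((("the", "cat"), "sleeps"), embeddings, weights)
```

The recursion follows the shape of the tree, so a different parse of the same words would generally produce a different sentence vector.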

Recurrent Neural Networks

Recurrent Neural Networks – Unlike the tree-like recursive networks above, a recurrent network has the structure of a linear chain. These neural networks are used to understand context in speech, text or music. The RNN allows information to loop through the network, so earlier inputs can influence how later ones are processed. Because of these loops, data does not flow in a single direction only. These networks are employed for highly complex tasks such as voice recognition, handwriting recognition and language recognition.

The abilities of RNNs are almost limitless. Don’t get lost between recursive and recurrent NNs. The structure of ANNs is what enables artificial intelligence, machine learning and supercomputing to flourish. Neural networks are used for language translation, face recognition, picture captioning, text summarization and many more tasks.
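The loop can be sketched as a state that is fed back into the network at every step of the sequence. The tanh activation, the state size and all weight values below are assumptions chosen for illustration.

```python
import math

def rnn_step(x, state, w_in, w_state):
    """New state computed from the current input and the previous state."""
    return [
        math.tanh(sum(wi * xi for wi, xi in zip(w_in[j], x)) +
                  sum(ws * s for ws, s in zip(w_state[j], state)))
        for j in range(len(state))
    ]

def run_rnn(sequence, w_in, w_state, state_size):
    """Process the sequence one element at a time, carrying the state along."""
    state = [0.0] * state_size
    for x in sequence:
        state = rnn_step(x, state, w_in, w_state)
    return state

w_in = [[0.5], [-0.3]]               # hypothetical: 1 input -> 2 state units
w_state = [[0.1, 0.4], [-0.2, 0.3]]  # hypothetical: state -> state (the loop)

# The same signal arriving early or late leaves a different final state.
early_signal = run_rnn([[1.0], [0.0], [0.0]], w_in, w_state, 2)
late_signal = run_rnn([[0.0], [0.0], [1.0]], w_in, w_state, 2)
```

In `early_signal` the input from the first step is still present in the final state, only weakened, which is the "memory" that a plain feedforward network lacks.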

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are an excellent tool and one of the most advanced achievements in deep learning. CNNs have received a great deal of attention from all major business players because of the hype around AI. The two core concepts of convolutional neural networks are convolution (hence the name) and pooling. A CNN does this job at the backend with many layers passing information in sequence from one to the next.

The human brain recognizes an image in a fraction of a second without much effort, but to computer vision the image is really just an array of numbers. In that array, each cell value represents the brightness of a pixel, from black to white, for a black and white image. Why do we need CNNs rather than just feed-forward neural networks? How can capsule networks be used to overcome the shortcomings of CNNs?

I guess if you read the post on “Convolutional Neural Networks”, you will find the answers.
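The two core operations, convolution and pooling, can be sketched on a small grayscale image stored as an array of brightness values. The image, the 2x2 kernel and the pooling window size are made up for illustration, and the sliding-window sum is written without kernel flipping, as is usual in deep learning practice.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [max(feature_map[r + i][c + j]
             for i in range(size) for j in range(size))
         for c in range(0, len(feature_map[0]) - size + 1, size)]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# 5x5 grayscale image: 0 is black, 255 is white; dark on the left, bright on the right.
image = [[0, 0, 255, 255, 255] for _ in range(5)]
# Hypothetical 2x2 kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)  # 4x4 map, large only at the edge
pooled = max_pool(feature_map)           # 2x2 summary of the feature map
```

Convolution highlights where the edge is, and pooling shrinks the result while keeping the strongest response in each region.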

Conclusion:

When to use artificial neural networks as opposed to traditional machine learning algorithms is a complex question to answer. It depends entirely on the problem at hand. One needs to be patient and experienced enough to have the correct answer. All credit, if any, remains with the original contributor only. The next post will talk about Recurrent Neural Networks in detail.
