Artificial Intelligence (AI): The History of Its Development

Artificial intelligence is a self-learning computer system designed to solve highly complex problems. It can handle management and computational tasks and is intended to control especially complex objects and systems (spacecraft, nuclear power plants, etc.).

The author of the term "Artificial Intelligence"

John McCarthy, who coined the term, is renowned for developing the Lisp language and is considered one of the pioneers of functional programming.

The concept of artificial intelligence is often associated with robotics, although robots and AI are distinct concepts with different defining properties. Still, at advanced levels of technological development, robots and AI are closely linked functionally.

( "R.U.R." is a play written by Karel Čapek in 1920; the title stands for the Czech "Rossumovi Univerzální Roboti", translated as "Rossum's Universal Robots" )

Artificial intelligence is a general concept

In the broadest sense, AI refers to the field of information technology that develops machines (systems) endowed with the basic capabilities of human intelligence:

- Logical reasoning;

- Learning through the accumulation of knowledge and experience;

- Ability to apply accumulated knowledge to manage the environment;

- Adaptability.

AI research develops algorithms and programs that solve problems the way a person would. The earliest such tasks were language processing and the recognition of speech, text, and images, followed later by video and faces.

The range of tasks keeps expanding: self-driving vehicles, computer-aided medical diagnosis, search engines, games, automated robot control systems, and much more.

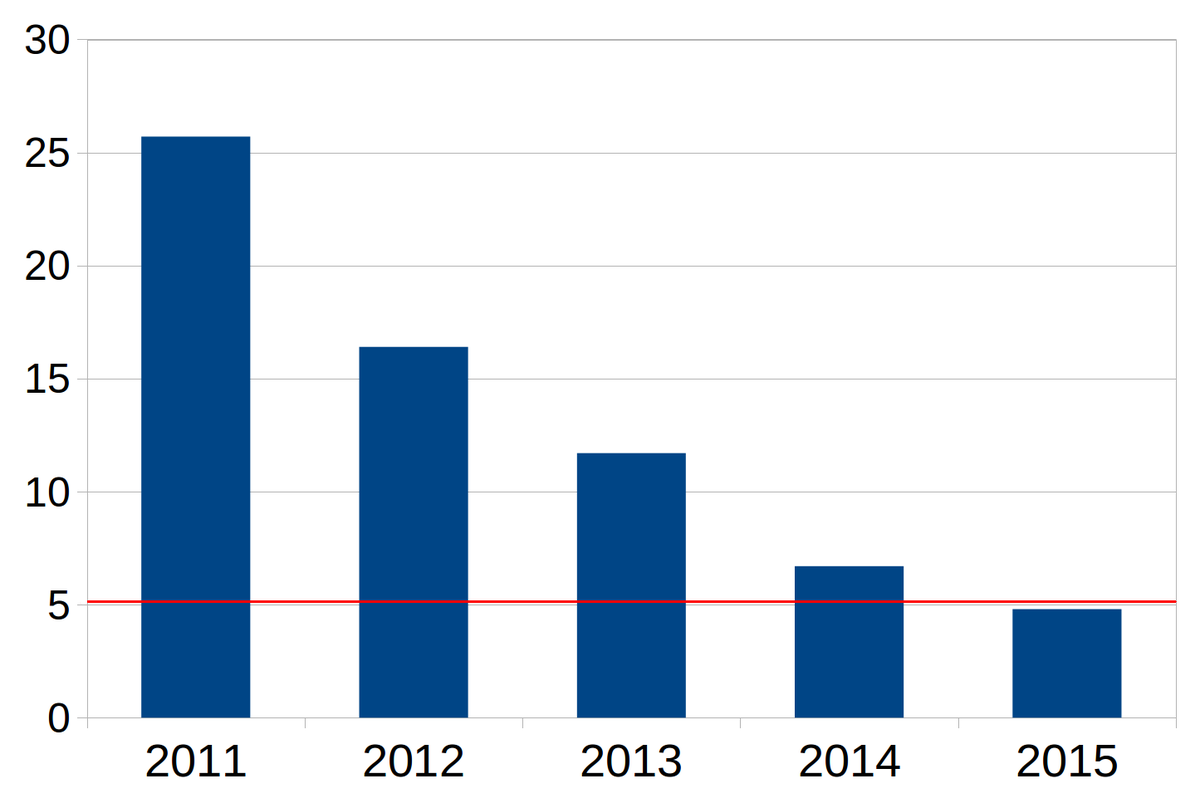

( Progress in machine image classification: AI error rate (%) by year, compared with the error rate of a trained human annotator (5.1%) )

What the abbreviation AI means

AI simply stands for artificial intelligence. Experts who use the abbreviation, however, often talk about the AI effect, a phenomenon that accompanies the evolution of AI: as soon as an AI learns to do something new, critics immediately argue that the new skill is not yet evidence that the machine (system) can think.

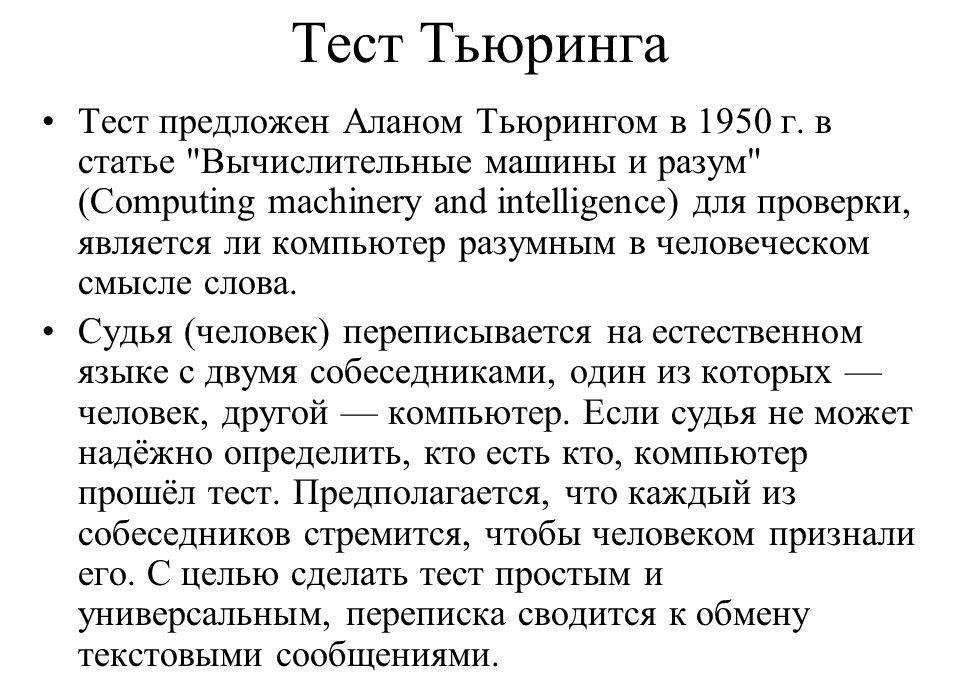

Turing test

The Turing test is closely related to the concept of artificial intelligence. In 1950, Alan Turing published the article "Computing Machinery and Intelligence", which opens with the question "Can machines think?". It describes a simple procedure, which Turing called the imitation game, for judging how closely a machine's conversational behavior approaches that of a person.

In the test, a computer and two people are placed in three separate rooms. One person, the judge, converses in turn with both the other person and the machine without knowing which is which. At the end of the test, the judge must say which of the two conversation partners was the human and which was the machine. The conversation is conducted in writing (usually in a chat), and all responses are delivered after a fixed delay so that response speed does not influence the judge's decision.
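To make the scoring of such a test concrete, here is a minimal sketch assuming a series of rounds in which the judge names which hidden partner ("A" or "B") they believe is the machine. The class and function names are hypothetical, and the 70% threshold is an assumption borrowed from Turing's own 1950 prediction that an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning; it is not a standardized pass criterion.

```python
from dataclasses import dataclass


@dataclass
class Round:
    """One round of the imitation game (hypothetical record format)."""
    machine_label: str  # which hidden partner ("A" or "B") actually was the machine
    judge_guess: str    # which partner the judge believed was the machine


def judge_accuracy(rounds: list[Round]) -> float:
    """Fraction of rounds in which the judge correctly identified the machine."""
    if not rounds:
        raise ValueError("at least one round is required")
    correct = sum(r.judge_guess == r.machine_label for r in rounds)
    return correct / len(rounds)


def machine_passes(rounds: list[Round], threshold: float = 0.70) -> bool:
    """Assumed pass criterion: the judge is right no more than `threshold` of the time.

    A judge guessing at random would be right about half the time.
    """
    return judge_accuracy(rounds) <= threshold


rounds = [Round("A", "A"), Round("B", "A"), Round("A", "B"), Round("B", "B"), Round("A", "A")]
print(judge_accuracy(rounds))  # 0.6
print(machine_passes(rounds))  # True
```

The judge here is right in three of five rounds, so the machine "passes" under the assumed threshold; a judge who identifies the machine every time drives the accuracy to 1.0 and the machine fails.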

History of Artificial Intelligence Research

The study of AI took shape as a separate science in the middle of the 20th century. Once it became clear that machines could carry out calculations much faster and more accurately than people, scientists realized how broad the possibilities of computers were, and the question arose: could they reach the human level? The creation of the first computers, which provided the basis for this research, is considered the impetus for the development of AI.

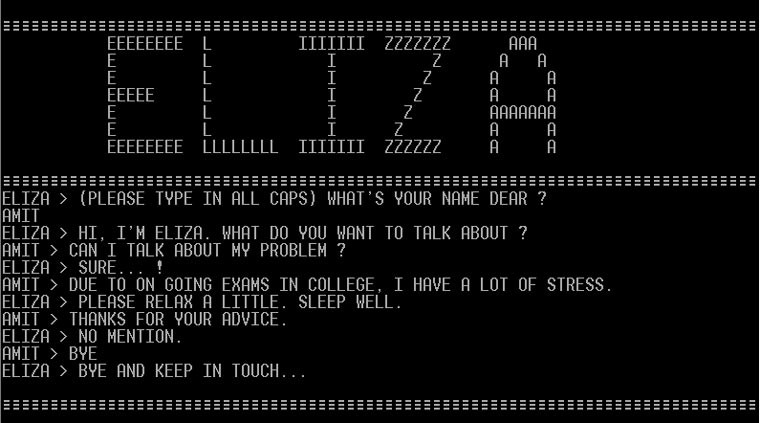

( ELIZA, the famous conversational program written by Joseph Weizenbaum in 1966 )

Timeline of the study and development of artificial intelligence

- 1943 - W. McCulloch and W. Pitts published a paper that laid the foundations of artificial neural networks and proposed a model of an artificial neuron.

- 1949 - D. Hebb described the principles by which groups of neurons learn.

- 1950 - A. Turing proposed, in popular form as a test, a way to compare the intelligence of a machine with that of a human. A person and a machine each communicate with another person by teletype or chat, and that person does not know which is which. If the machine succeeds in passing itself off as the human, it is considered to have demonstrated intelligence.

- 1954 - Computational linguistics is born. The Georgetown experiment demonstrated machine translation of Russian texts into English. It was covered by major media outlets around the world, and although only very simple sentences could be translated, it was presented as a great scientific breakthrough.

- 1956 - The concept of AI is introduced at the Dartmouth workshop.

- 1965 - Creation of Dendral, the first expert system. From infrared, mass-spectrometry and NMR spectroscopy data, together with data supplied by the user, it infers a chemical structure. The system can reject unsuitable hypotheses and propose new ones.

- 1966 - ELIZA is created, a computer program that can carry on a conversation in the role of a human interlocutor.

- 1969 - M. Minsky and S. Papert, in the book "Perceptrons", identified and rigorously demonstrated computational limitations of artificial neural networks that could not be overcome at the time, and interest in neural networks practically disappeared for a while.

- 1969 - Robotics begins to develop, with the creation of Freddy, the first universal robot.

- 1970 - On November 17, "Lunokhod-1", a remotely controlled self-propelled vehicle, landed on the lunar surface. It operated for 11 lunar days and traveled 10,540 meters.

- Early 1970s - Creation of the MYCIN expert system, which analyzed the symptoms of infectious blood diseases, identified the pathogenic bacteria, and recommended antibiotics with calculated dosages.

- 1971 - Creation of the Stanford robot, the first mobile robot to operate autonomously, without human guidance.

- 1981 - Creation of industrial robots with microprocessor control and advanced sensor systems.

- 1982 - Interest in neural networks returns, and the Hopfield network, a recurrent network with symmetric two-way connections, is created.

- 1982 - Development of a speech recognition system begins.

- 1993 - A robot tour guide operates successfully at the Massachusetts Institute of Technology.

- 1997 - IBM's Deep Blue computer defeats world chess champion Garry Kasparov in a match.

- 1999 - Sony introduces Aibo, a robotic pet dog. Seven years later the project, which had not become a hit, was closed, but in 2017 the developers returned to it.

- 2009 - Launch of Wolfram Alpha, an answer engine that can process queries written in natural language.

- 2010 - AI spreads to consumer applications and devices. Huge datasets brought a breakthrough in AI training, and new, efficient algorithms for training neural networks were developed.

- 2017 - The insurance company Fukoku Mutual Life Insurance replaced 34 employees with a single AI system.

- 2017 - Amazon's AI-based recommendation system reportedly accounts for 40% of sales by suggesting the products customers are most likely to buy.
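The two earliest milestones, the artificial neuron of 1943 and Hebb's learning principle of 1949, can be illustrated in a few lines. This is a simplified sketch, not the original formulations: the neuron is a McCulloch-Pitts-style threshold unit that outputs 1 when the weighted sum of its binary inputs reaches a threshold, and the update is the textbook form of Hebb's rule, in which a connection grows in proportion to the product of input and output activity. All function names, weights, thresholds, and the learning rate are illustrative choices.

```python
def threshold_neuron(inputs: list[int], weights: list[float], threshold: float) -> int:
    """Simplified McCulloch-Pitts-style unit: fires (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With hand-chosen weights and thresholds, the same unit computes different logic functions.
def logic_and(a: int, b: int) -> int:
    return threshold_neuron([a, b], [1.0, 1.0], threshold=2.0)


def logic_or(a: int, b: int) -> int:
    return threshold_neuron([a, b], [1.0, 1.0], threshold=1.0)


def hebbian_update(weights: list[float], pre: list[int], post: int, rate: float = 0.1) -> list[float]:
    """Simplest form of Hebb's rule: delta_w_i = rate * pre_i * post.

    Connections whose input was active while the output neuron fired get stronger;
    the others are left unchanged.
    """
    return [w + rate * x * post for w, x in zip(weights, pre)]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logic_and(a, b), logic_or(a, b))

w = [0.0, 0.0]
for _ in range(5):
    # Input 0 is repeatedly active together with the output; input 1 never is.
    w = hebbian_update(w, pre=[1, 0], post=1)
print(w)
```

After five co-activations, the first weight has grown to roughly 0.5 while the second stays at zero, which is the "neurons that fire together wire together" idea in its most basic form.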

The main challenge for such systems, however, is not the complexity of information processing or the search for optimal solutions to assigned tasks, but the ability to think and feel in the broad sense of the word. The first steps in this direction came with neural networks, which can form changing connections between various events and phenomena, much like neurons in the brain, only working thousands of times faster. The drawback of such networks is that they cannot be programmed directly; they must learn from their own experience.
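The idea that a network is trained on examples rather than programmed can be sketched with a single-layer perceptron and its error-correction learning rule. The perceptron is not named above; it is chosen here as the simplest illustration and because it is the type of network whose limitations Minsky and Papert analyzed in 1969. Given examples of the logical AND function, it finds suitable weights on its own; given XOR, which is not linearly separable, it never converges. The function names, epoch limits, and learning rate are arbitrary assumptions.

```python
def train_perceptron(samples: list[tuple[tuple[int, ...], int]], epochs: int = 20, rate: float = 1.0):
    """Learn weights and a bias from (inputs, target) examples with the perceptron rule.

    Returns (weights, bias, converged); converged is True if some epoch
    finished with no classification errors.
    """
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = 1 if activation > 0 else 0
            error = target - output  # -1, 0 or +1
            if error != 0:
                errors += 1
                # Nudge each weight toward the correct answer for this example.
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                bias += rate * error
        if errors == 0:
            return weights, bias, True
    return weights, bias, False


def predict(weights: list[float], bias: float, inputs: tuple[int, ...]) -> int:
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b, ok = train_perceptron(AND_DATA)
print("AND learned:", ok, [predict(w, b, x) for x, _ in AND_DATA])

_, _, ok_xor = train_perceptron(XOR_DATA, epochs=100)
print("XOR learned:", ok_xor)
```

No rule for AND is written anywhere in the code; the weights emerge from the examples. The XOR failure is not a matter of training longer: no single line can separate its positive and negative cases, which is why multi-layer networks were needed.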

Applications

AI is used in many fields of human activity, including the chemical industry, linguistics, medicine, robotics, and manufacturing.

( An assembly line consisting entirely of robotic manipulators controlled by a single assembly program )

Artificial intelligence is widely used in search engines and in systems that translate natural human speech into other languages. According to some forecasts, once a full-fledged AI has been created and trained, control of all the planet's production processes will be transferred to it, leaving people only creative tasks.

Artificial Intelligence in cinema

Since the early days of AI, filmmakers and artists have portrayed a future in which AI competes with humans, and humans do not always win.

( The Skynet artificial intelligence from Cyberdyne Systems, the film "The Terminator" )

1968 - "2001: A Space Odyssey", a film by S. Kubrick in which HAL 9000, the ship's AI onboard computer, turns against the crew instead of helping them. The theme of the machine uprising was later taken up by countless followers.

1984 - "The Terminator": Skynet, the AI created by Cyberdyne Systems in James Cameron's films, spontaneously gains free will and sets out to destroy humanity. Did the plot prove prophetic?

( The AI-created program simulating the human world, from the film "The Matrix" )

1999 - The Wachowskis create the famous film "The Matrix", in which AI builds a simulated world for people.

2005 - "The Hitchhiker's Guide to the Galaxy", a film in which AI appears as a planet-sized supercomputer searching for the answer to the ultimate question of life.

The answer was found, but did humanity like it?

2014 - "Transcendence", a film about an AI that absorbs all the knowledge accumulated by humanity.

Humanity has long dreamed of real AI and at the same time is very afraid of it.

Artificial Intelligence: Humans and Ethics

The development of any new technology must address the control problem. The owner of the technology should have maximum capabilities, while the machine's own scope for decision-making should be kept to a minimum. From a human point of view, an ideal machine should be helpless without a person, since its purpose is to extend the person's ability to control.

As early as 1942, I. Asimov formulated principles of human-machine interaction in his “Three Laws of Robotics”. Should an evolved AI be bound by the same limitations?

According to N. Bostrom and E. Yudkowsky, the main characteristics of a conscious being are sentience (the capacity to feel, including the ability to suffer), self-awareness, and reflection. This is not quite what owners and developers have in mind.

Intelligence, by its very nature, cannot be controlled. Nevertheless, while people from many walks of life discuss scenarios of a machine uprising, inventors strive to create a machine that is extremely smart yet also extremely "benevolent", with actions that are easy to control and predict. This contradiction has no solution yet.

Artificial Intelligence Facts

AI is getting stronger, but it is far from perfect. It still makes significant prediction and recognition errors, although in some tasks its error rate is already lower than that of humans.

And while people debate how ethical AI decisions are, another event went almost unnoticed: the AlphaGo program beat a professional human player, European champion Fan Hui, at Go with a score of 5:0.

Go was the last major logic game in which human abstract thinking was considered an absolute advantage. Many moves in Go are made on intuition, and the game practically resists being captured by an explicit algorithm.

Yet the AI managed to win.
